The Rising Cost of AI Model Training: Why It Matters

Understanding the full financial picture before committing to training large language models (LLMs)

Why Cost Matters

Training and running large language models isn’t just about compute—it’s a major financial commitment that can quickly escalate without careful planning.

The architecture you choose and the hardware you run it on can make or break your AI budget. Model size, layer design, parallelization strategy, and GPU type all have a direct impact on both training and inference costs, often by orders of magnitude.

Without understanding these relationships upfront, teams risk overpaying for performance they don’t need or missing cost-saving optimizations. Our tools help you model these factors before you commit, so every design choice is also a smart financial one.
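As a rough illustration of how model size and GPU choice feed into training cost, the sketch below uses the widely quoted approximation that training a dense transformer takes about 6 × parameters × tokens FLOPs. The `TrainingRun` class, its field names, and every input value are hypothetical placeholders chosen for the example; they are not figures from Epoch AI or output from any real tool.

```python
from dataclasses import dataclass


@dataclass
class TrainingRun:
    """Hypothetical inputs; every value passed in below is a placeholder."""
    params: float              # number of model parameters (N)
    tokens: float              # number of training tokens (D)
    peak_flops_per_gpu: float  # peak FLOP/s of the chosen GPU
    utilization: float         # fraction of peak actually achieved, 0 to 1
    price_per_gpu_hour: float  # rental price in USD per GPU-hour


def training_gpu_hours(run: TrainingRun) -> float:
    """Estimate GPU-hours with the ~6 * N * D FLOPs rule of thumb."""
    if not 0 < run.utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 6.0 * run.params * run.tokens
    effective_flops_per_sec = run.peak_flops_per_gpu * run.utilization
    return total_flops / effective_flops_per_sec / 3600.0


def training_compute_cost(run: TrainingRun) -> float:
    """Estimate compute-only cost in USD (no staff, energy, or networking)."""
    return training_gpu_hours(run) * run.price_per_gpu_hour


# Placeholder scenario: 1B parameters, 20B tokens, a GPU with 1e14 peak FLOP/s
# running at 40% utilization, rented at 2 USD per GPU-hour.
small = TrainingRun(1e9, 2e10, 1e14, 0.4, 2.0)
# Same setup with twice the parameters, to show how cost scales with size.
large = TrainingRun(2e9, 2e10, 1e14, 0.4, 2.0)

print(f"small: {training_gpu_hours(small):,.0f} GPU-hours, "
      f"${training_compute_cost(small):,.0f}")
print(f"large: {training_gpu_hours(large):,.0f} GPU-hours, "
      f"${training_compute_cost(large):,.0f}")
```

Under these assumptions, doubling the parameter count doubles the estimated compute cost, and halving utilization has the same effect. A real estimate would also need to account for parallelization overhead, restarts, and inference, which this sketch leaves out.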

The Cost Explosion of LLMs

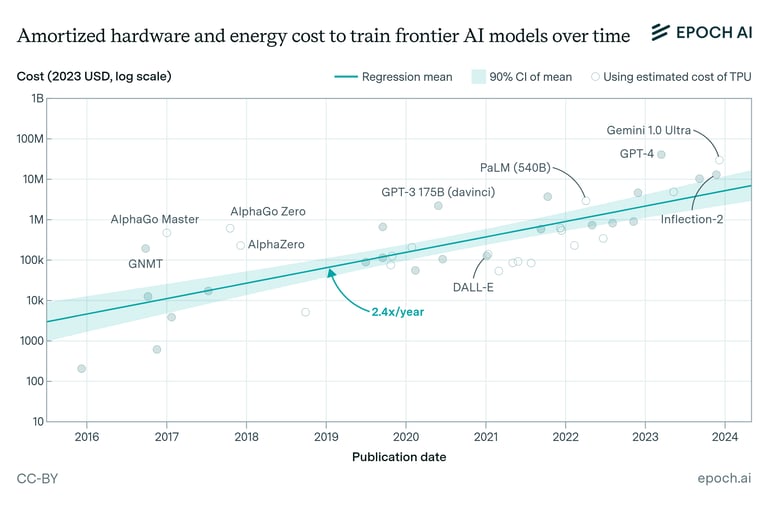

Training state-of-the-art AI models has become dramatically more expensive, with leading systems now costing tens to hundreds of millions of dollars to develop. According to Epoch AI, costs are driven not just by compute, but by increasing model size, massive datasets, infrastructure complexity, and specialized engineering teams.

For teams planning to train or fine-tune large models, understanding this cost curve early is critical—unexpected overruns can mean millions in unplanned expenses and delayed timelines.

Source: Epoch AI, licensed under CC-BY.

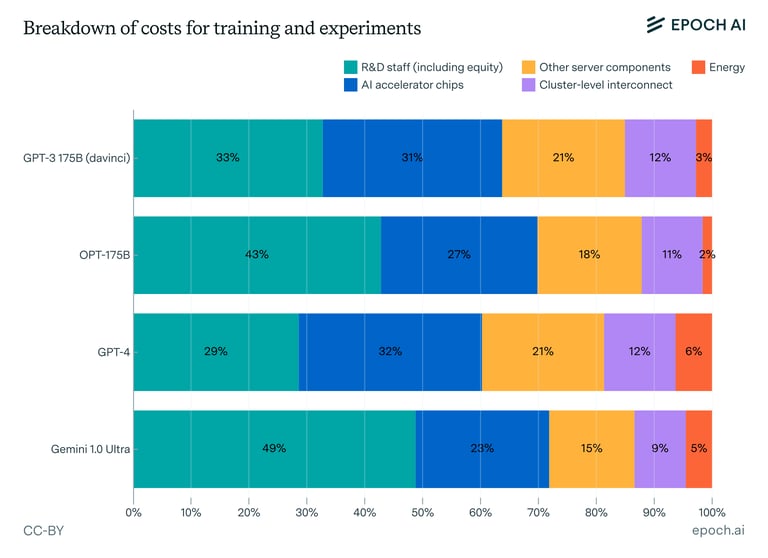

More Than Compute: Understanding the TCO

Compute is only part of the picture—R&D staffing, infrastructure, interconnect, and energy can equal or exceed hardware costs. Source: Epoch AI, licensed under CC-BY.

While GPUs and AI accelerator chips receive most of the attention, Epoch AI’s research highlights that R&D staff, infrastructure, energy, and interconnect costs often rival compute. This is why we focus on total cost of ownership (TCO) when assessing LLM budgets, not just hardware spend.

Bottom line: If you’re only modeling GPU costs, you’re missing critical budget factors that determine project viability and long-term ROI.
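To make the point concrete, here is a minimal sketch of a TCO breakdown that reports each category's share of the total. The function name `tco_shares`, the category names, and the amounts are all hypothetical placeholders in arbitrary units; they are not Epoch AI's figures and should be replaced with your own estimates.

```python
def tco_shares(costs: dict[str, float]) -> dict[str, float]:
    """Return each cost category's fraction of the total cost of ownership."""
    if any(amount < 0 for amount in costs.values()):
        raise ValueError("cost amounts must be non-negative")
    total = sum(costs.values())
    if total <= 0:
        raise ValueError("total cost must be positive")
    return {name: amount / total for name, amount in costs.items()}


# Placeholder amounts in arbitrary units, NOT real data.
example_costs = {
    "gpu_hardware": 50.0,
    "rd_staff": 30.0,
    "interconnect": 10.0,
    "energy": 10.0,
}

shares = tco_shares(example_costs)
for name, share in shares.items():
    print(f"{name:>14}: {share:.0%}")

# Portion of the budget a GPU-only model would fail to capture.
non_gpu_share = 1.0 - shares["gpu_hardware"]
print(f"missed by a GPU-only model: {non_gpu_share:.0%}")
```

With these placeholder inputs, a budget that models only GPU hardware would capture half of the total. The actual split depends on the project.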

Ben Cottier, Robi Rahman, Loredana Fattorini, Nestor Maslej, and David Owen. ‘The rising costs of training frontier AI models’. arXiv:2405.21015 [cs.CY], 2024. https://arxiv.org/abs/2405.21015.

Contact Costlytic Insights

With AI models growing in complexity and scale, the cost of training and deploying them has outpaced many traditional IT budgets. Whether you are a research lab, startup, or enterprise, proactive cost analysis can be the difference between success and costly missteps.

Contact us today to learn how we help you model, forecast, and optimize LLM costs before you scale.

Support

info@costlytic.tech

© 2025. All rights reserved.